How Cloudflare Achieved 55 Million Requests per Second with Just 15 PostgreSQL Clusters! 💻

Table of contents

- PostgreSQL Scalability: The Core 🚀

- Resource Usage Optimization with PgBouncer 🔄

- Thundering Herd Problem Solved! 🐘

- Performance Boost with Bare Metal Servers and HAProxy ⚙️

- Congestion Avoidance Algorithm for Concurrency 🚧

- Ordering Queries Strategically with Priority Queues 📊

- High Availability with Stolon Cluster Manager 🌐

- Conclusion 🌈

- Connect with Me on social media 📲

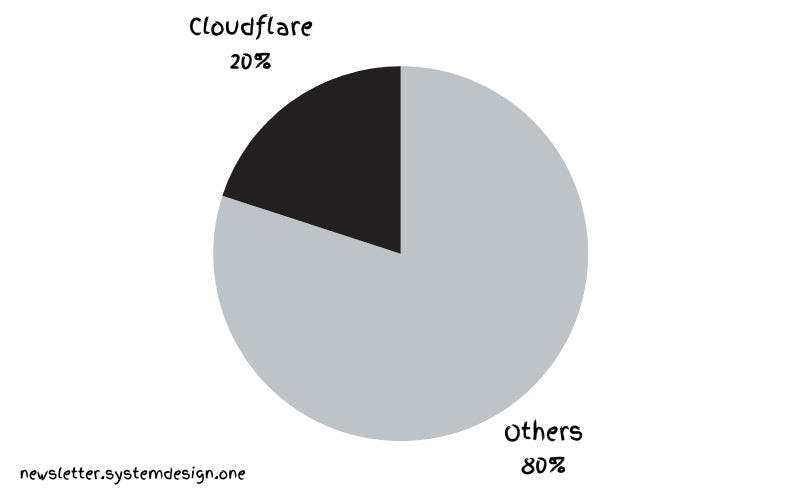

In the vast landscape of the internet, Cloudflare emerged in July 2009, founded by a group of visionaries with the goal of making the internet faster and more reliable. The challenges they faced were immense, but their growth was nothing short of spectacular. Fast forward to today, and Cloudflare serves a whopping 20% of the internet’s traffic, handling a staggering 55 million HTTP requests per second. The most incredible part? The PostgreSQL layer behind its services runs on only 15 clusters. Let’s dive into the magic behind this impressive system design!

PostgreSQL Scalability: The Core 🚀

Resource Usage Optimization with PgBouncer 🔄

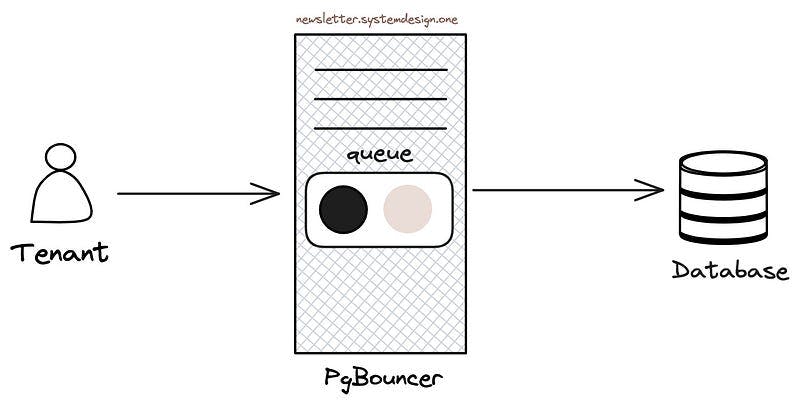

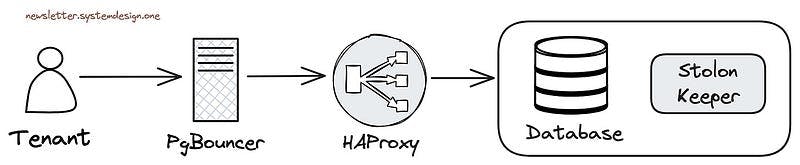

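Handling Postgres connections efficiently is crucial. Every Postgres connection is served by its own backend process, so thousands of direct client connections would waste memory and CPU. Cloudflare therefore uses PgBouncer as a TCP proxy that maintains a pool of connections to Postgres. Clients borrow a connection from that pool instead of opening their own.

This prevents connection starvation. It also helps with the very different workloads that different tenants send to the same cluster.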
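The following Python sketch is not PgBouncer's code. PgBouncer is a standalone C program configured through an ini file. The sketch only models the pooling idea and assumes a fixed pool size. `FakeConnection` and `ConnectionPool` are hypothetical names, and no real database is contacted.

```python
import queue
from contextlib import contextmanager


class FakeConnection:
    """Hypothetical stand-in for a real Postgres server connection."""

    def __init__(self, conn_id: int) -> None:
        self.conn_id = conn_id
        self.queries_run = 0

    def execute(self, sql: str) -> str:
        self.queries_run += 1
        return f"conn {self.conn_id} ran: {sql}"


class ConnectionPool:
    """Fixed-size pool: clients borrow an existing connection instead of opening one."""

    def __init__(self, size: int) -> None:
        self._idle = queue.Queue(maxsize=size)
        for i in range(size):
            self._idle.put(FakeConnection(i))

    @contextmanager
    def connection(self, timeout: float = 1.0):
        # Wait until a connection is free; raises queue.Empty if none frees up in time.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            # Hand the connection back so the next client can reuse it.
            self._idle.put(conn)


pool = ConnectionPool(size=2)
results = []
for client in range(6):  # six clients share two server connections
    with pool.connection() as conn:
        results.append(conn.execute(f"SELECT {client}"))
```

Six clients are served by two server connections. Each client returns its connection to the pool when it finishes instead of closing it.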

Thundering Herd Problem Solved! 🐘

The infamous thundering herd problem occurs when a large number of clients hit a server at the same moment, for example after a restart. Cloudflare addresses it with PgBouncer, which throttles the number of Postgres connections a given tenant can create. A surge from one tenant therefore does not degrade database performance for everyone else during high traffic.
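The post doesn't spell out how the per-tenant cap is enforced. Here is a minimal sketch that assumes a fixed limit per tenant and uses one `threading.BoundedSemaphore` per tenant. `TenantThrottle`, `try_acquire`, and `release` are hypothetical names.

```python
import threading


class TenantThrottle:
    """Caps how many server connections each tenant may hold at once (illustrative sketch)."""

    def __init__(self, per_tenant_limit: int) -> None:
        self._limit = per_tenant_limit
        self._slots: dict[str, threading.BoundedSemaphore] = {}
        self._lock = threading.Lock()

    def _slot(self, tenant: str) -> threading.BoundedSemaphore:
        # Lazily create one semaphore per tenant; the lock guards the dict.
        with self._lock:
            if tenant not in self._slots:
                self._slots[tenant] = threading.BoundedSemaphore(self._limit)
            return self._slots[tenant]

    def try_acquire(self, tenant: str) -> bool:
        # Non-blocking: refuse immediately if this tenant is already at its limit.
        return self._slot(tenant).acquire(blocking=False)

    def release(self, tenant: str) -> None:
        self._slot(tenant).release()


throttle = TenantThrottle(per_tenant_limit=2)
granted = [throttle.try_acquire("tenant-a") for _ in range(3)]
other = throttle.try_acquire("tenant-b")
throttle.release("tenant-a")
after_release = throttle.try_acquire("tenant-a")
```

Each tenant has its own semaphore. When `tenant-a` hits its cap and its third request is refused, `tenant-b` is unaffected.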

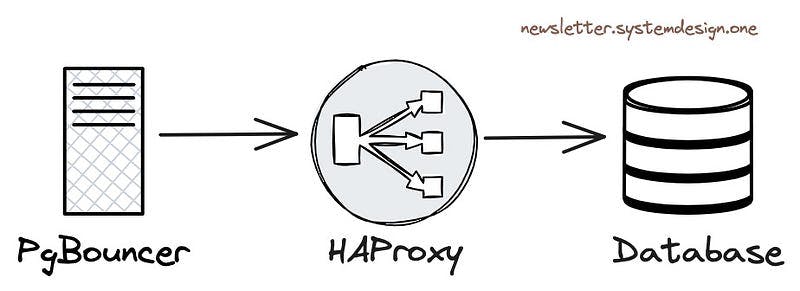

Performance Boost with Bare Metal Servers and HAProxy ⚙️

Cloudflare runs its databases on bare metal servers without virtualization, which avoids hypervisor overhead and keeps performance high. It uses HAProxy as a layer 4 (TCP) load balancer to distribute traffic across the primary database and its read replicas.
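HAProxy is configured declaratively rather than in code. The policy it applies to TCP backends with `balance roundrobin` and health checks can still be sketched in Python. The backend addresses below are hypothetical. The sketch models only rotation among replicas, not how Cloudflare splits traffic between the primary and the replicas.

```python
from itertools import cycle


class L4Balancer:
    """Round-robin over TCP backends, skipping any that failed a health check (illustrative)."""

    def __init__(self, backends: list[str]) -> None:
        self._backends = backends
        self._healthy = set(backends)
        self._ring = cycle(backends)

    def mark_down(self, backend: str) -> None:
        self._healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        self._healthy.add(backend)

    def pick(self) -> str:
        # Visit each backend at most once per call, returning the next healthy one.
        for _ in range(len(self._backends)):
            candidate = next(self._ring)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends")


replicas = ["replica-1:5432", "replica-2:5432", "replica-3:5432"]  # hypothetical hosts
lb = L4Balancer(replicas)
lb.mark_down("replica-2:5432")  # e.g. failed a TCP health check
picks = [lb.pick() for _ in range(4)]
```

Because the balancer works at layer 4, it only chooses where each TCP connection goes. It never inspects the SQL inside.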

Congestion Avoidance Algorithm for Concurrency 🚧

To manage concurrent queries and avoid performance degradation, Cloudflare employs the TCP Vegas congestion avoidance algorithm.

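This algorithm samples each tenant’s transaction round-trip time to Postgres and compares it with the lowest round-trip time observed. When latency climbs above that baseline, it shrinks the tenant’s connection pool. When latency stays close to the baseline, it lets the pool grow. This throttles traffic before resource starvation occurs.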
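Below is a sketch of the classic TCP Vegas rule applied to a connection pool instead of a congestion window. It tracks the lowest round-trip time seen (`base_rtt`) and estimates how many requests are queued as pool_size × (1 − base_rtt / rtt). Too few queued requests means there is headroom to grow. Too many means the database is saturating. The thresholds `alpha` and `beta`, the size bounds, and the sample RTTs are hypothetical values, not Cloudflare's settings.

```python
from dataclasses import dataclass


@dataclass
class VegasPoolSizer:
    """Resizes one tenant's pool with a TCP Vegas-style rule (illustrative sketch)."""

    pool_size: int = 10
    alpha: float = 2.0   # grow if fewer than about alpha requests appear queued
    beta: float = 4.0    # shrink if more than about beta requests appear queued
    min_size: int = 1
    max_size: int = 100
    base_rtt: float = float("inf")  # lowest RTT observed so far

    def observe(self, rtt: float) -> int:
        """Feed one sampled transaction round-trip time (seconds); return the new pool size."""
        self.base_rtt = min(self.base_rtt, rtt)
        expected = self.pool_size / self.base_rtt     # throughput if nothing were queued
        actual = self.pool_size / rtt                 # throughput actually achieved
        queued = (expected - actual) * self.base_rtt  # estimated requests waiting
        if queued < self.alpha:
            self.pool_size = min(self.pool_size + 1, self.max_size)
        elif queued > self.beta:
            self.pool_size = max(self.pool_size - 1, self.min_size)
        return self.pool_size


sizer = VegasPoolSizer()
sizes = [sizer.observe(rtt) for rtt in [0.010, 0.010, 0.011, 0.020, 0.030]]
```

As latency rises from 10 ms to 30 ms, the pool grows while there is headroom and then shrinks back. The tenant is throttled before Postgres is overwhelmed.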

Ordering Queries Strategically with Priority Queues 📊

Cloudflare tackles query latency by ranking queries at the PgBouncer layer using queues based on historical resource consumption.

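Queries that have historically consumed more resources are moved toward the back of the queue, so cheap queries are not stuck behind expensive ones. Priority queuing is enabled only during peak traffic. This keeps the heavier queries from being starved when the system has capacity to spare.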
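Here is a minimal sketch with Python's `heapq`. It assumes each query's historical cost (here an average execution time in milliseconds) is already known. `CostAwareQueue` and the cost figures are hypothetical. The `priority_enabled` flag stands in for switching prioritization on during peak traffic.

```python
import heapq
import itertools


class CostAwareQueue:
    """Orders waiting queries by historical cost so cheap ones run first (illustrative sketch)."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, str]] = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order for equal keys
        self.priority_enabled = False  # hypothetical switch, turned on at peak traffic

    def push(self, query: str, historical_cost_ms: float) -> None:
        # With priority off, every query gets the same key, so ordering is plain FIFO.
        # The key is fixed at push time; toggling the flag affects only later pushes.
        key = historical_cost_ms if self.priority_enabled else 0.0
        heapq.heappush(self._heap, (key, next(self._seq), query))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


history = {"SELECT report": 900.0, "SELECT dashboard": 5.0, "SELECT login": 2.0}  # made-up costs

peak = CostAwareQueue()
peak.priority_enabled = True
for name in history:
    peak.push(name, history[name])
peak_order = [peak.pop() for _ in range(len(peak))]

quiet = CostAwareQueue()
for name in history:
    quiet.push(name, history[name])
quiet_order = [quiet.pop() for _ in range(len(quiet))]
```

At peak, the expensive report query waits until the cheaper ones have run. Off-peak, queries run in arrival order.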

High Availability with Stolon Cluster Manager 🌐

Ensuring high availability is a top priority for Cloudflare. It uses the Stolon cluster manager to replicate data across Postgres instances and to fail over automatically, even during peak traffic. Combined with data replication across regions and proactive network testing, this keeps the system robust and resilient.
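Stolon divides the work among three components. Keepers manage the Postgres instances. Sentinels monitor them and decide on failover. Proxies route clients to the current primary. The sketch below is not Stolon's code. It illustrates one decision a failover manager has to make: picking the healthy standby that has replayed the most write-ahead log (WAL), so the least data is lost. Representing the WAL position as a plain integer and the `Replica`/`choose_new_primary` names are simplifications for illustration.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    healthy: bool
    replayed_lsn: int  # simplified WAL position; real Postgres LSNs look like "0/3000060"


def choose_new_primary(replicas: list[Replica]) -> Replica:
    """Pick the healthy standby that has replayed the most WAL (illustrative sketch)."""
    candidates = [r for r in replicas if r.healthy]
    if not candidates:
        raise RuntimeError("no healthy standby available for failover")
    return max(candidates, key=lambda r: r.replayed_lsn)


standbys = [
    Replica("pg-b", healthy=True, replayed_lsn=5_000),
    Replica("pg-c", healthy=True, replayed_lsn=5_120),
    Replica("pg-d", healthy=False, replayed_lsn=5_300),  # unreachable, so it is skipped
]
new_primary = choose_new_primary(standbys)
```

`pg-d` is furthest ahead but unreachable, so `pg-c` is promoted. After promotion, a proxy layer would redirect clients to it.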

Conclusion 🌈

Cloudflare’s journey to handling 55 million requests per second with just 15 PostgreSQL clusters is a testament to their ingenious system design. From smart connection pooling to tackling concurrency and ensuring high availability, they’ve navigated the complexities of scaling with finesse. Subscribe to our newsletter for more simplified case studies and unravel the secrets behind the tech giants’ success! 🚀🔍

Connect with Me on social media 📲

🐦 Follow me on Twitter: devangtomar7

🔗 Connect with me on LinkedIn: devangtomar

📷 Check out my Instagram: be_ayushmann

Ⓜ️ Check out my blogs on Medium: Devang Tomar

#️⃣ Check out my blogs on Hashnode: devangtomar

🧑‍💻 Check out my blogs on Dev.to: devangtomar